My father liked to order things off the menu that he had never eaten and then cheerfully attempt to get his children to try them. This is a cheerful kind of cruelty that I have inherited. In later years we often told the story of the time we ordered sea urchins in a dingy Chinese restaurant in New York. The consensus was that the sea urchins weren’t actually dead when they got to the table. They would go in your mouth, and when they discovered you were attempting to chew on them they would quickly flee to the other side of your mouth. They were very low life forms, so once you had succeeded in biting one in two, your reward was two panicked sea urchins in your mouth.

I’m reminded of this story by the fun people are having with neologisms these days, for example blog or folksonomy. A neologism is rarely a very highly evolved creature, which makes it hard to pin down. But therein lies the fun. You can have entire conferences about a single word because, collectively, nobody really knows what the word means. These littoral zones are full of odd creatures. The tide of Moore’s law and his friends keeps rising. The cheerfully cruel keep finding things to order off the menu.

But before I was taken prisoner by that nostalgic reminiscence, what I wanted to talk about is this definition of ontology that Clay posted this morning.

> The definition of ontology I’m referring to is derived more from AI than philosophy: a formal, explicit specification of a shared conceptualization. (Other glosses of this AI-flavored view can be found using Google for define:ontology.) It is that view that I am objecting to.

Now, I don’t want to get drawn into the fun that Clay’s having: bear-baiting the information sciences.

What I do want to do is point out that the function of these “explicit specifications of a shared conceptualization” is not just to provide a solid outcome to the fun-for-all neologism game.

The purpose of these labors is to create shared conceptualizations, explicit specifications, that enable more casual acts of exchange between parties. The labor to create an ontology isn’t navel gazing. It isn’t about ordering books on the library shelves. It isn’t about stuffing your book’s worth of knowledge, a tasty chicken salad, between two dry crusts: the table of contents and the index.

It’s about enabling a commerce of transactions that take place upon that ontology. Thus a system of weights and measures is an ontology over the problem of measurement; it enables exchanges to take place without having to negotiate from scratch, probably with the help of a lawyer, the meaning of a cord each time you order firewood. And weights and measures are only the tip of the iceberg of ontologies that provide the foundation for efficient commerce.
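The weights-and-measures point can be made concrete with a toy sketch. Everything here is an illustrative assumption: the table name `SHARED_VOLUME_UNITS` and the function `convert` are hypothetical, and the only "ontology" is one explicit table that buyer and seller both consult. The cord is taken to be the US standard stack of 4 ft × 4 ft × 8 ft, or 128 cubic feet.

```python
# Toy "shared conceptualization": one agreed, explicit table of volume units,
# every entry expressed in cubic feet. Names here are hypothetical.
SHARED_VOLUME_UNITS = {
    "cubic_foot": 1.0,
    "cord": 4 * 4 * 8,               # US standard cord: a 4 ft x 4 ft x 8 ft stack
    "cubic_meter": 1 / 0.3048 ** 3,  # the international foot is exactly 0.3048 m
}

def convert(quantity: float, from_unit: str, to_unit: str) -> float:
    """Convert between units via the shared table.

    An unknown unit raises KeyError instead of being guessed at, which is the
    point: anything outside the agreed vocabulary has to be negotiated.
    """
    cubic_feet = quantity * SHARED_VOLUME_UNITS[from_unit]
    return cubic_feet / SHARED_VOLUME_UNITS[to_unit]

# Buyer and seller consult the same table, so "2 cords" means the same volume to both.
print(convert(2, "cord", "cubic_foot"))
print(round(convert(1, "cord", "cubic_meter"), 2))
```

Nothing clever happens in the conversion itself; the value is in both parties having agreed on the table beforehand.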

But it’s not just commerce. Ontologies provide the vocabulary that enables one to describe the weather all over the planet and, in turn, predict tomorrow’s snowstorm. They provide the opportunity to notice the planet is getting warmer and to decide that, holy crap, it’s true!

To rail against ontology is to rail against both the scientific method and modern capitalism. That’s not a little sand castle on the beach, soon to be rolled over by the rising tide. Unlike, say, journalism vs. blogs, it’s not an institution whose distribution channel is being disintermediated by Mr. Moore and his friends. Those two are very big sand castles. They will be, indeed they are being, reshaped by these processes, but they will still stand when it’s over.

What really caught my attention in Clay’s quote was “shared conceptualizations.” Why? Because sharing is, to me, an arc in a graph; and that means a network, which means network effects, which means we can start to talk about Reed’s law, power laws, etc. It implies that each ontology forms a group in the network of actors. To worry about how big, durable, or well-founded these groups are is to miss the point of what’s happening. It’s the quantity, once again, that counts.
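Reed’s law is the observation that the value of a group-forming network tracks the number of possible groups among its members, which grows exponentially, rather than the number of pairwise links (Metcalfe’s law), which grows only quadratically. A quick count, as a sketch, shows how fast the two diverge. It counts *possible* groups only; it says nothing about which groups actually form or what any of them is worth.

```python
from math import comb

def pairwise_links(n: int) -> int:
    """Possible arcs (pairs) among n actors: the quantity behind Metcalfe's law."""
    return n * (n - 1) // 2

def possible_groups(n: int) -> int:
    """Possible groups of two or more actors: the quantity behind Reed's law.

    All 2**n subsets, minus the empty set and the n single-actor subsets.
    """
    return 2 ** n - n - 1

def possible_groups_brute(n: int) -> int:
    """The same count summed subset size by subset size, as a cross-check."""
    return sum(comb(n, k) for k in range(2, n + 1))

for n in (5, 10, 20, 30):
    print(f"{n:3d} actors: {pairwise_links(n):4d} links, {possible_groups(n):,} possible groups")
```

Even at thirty actors, the links number in the hundreds while the possible groups number over a billion, which is why a bloom of tiny groups can matter more than a few large ones.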

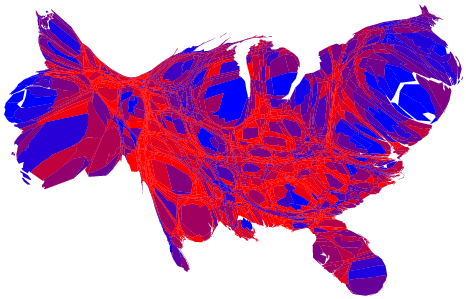

What we are seeing around what is currently labeled folksonomy is a bloom of tiny groups that have rendezvoused around some primitive ontology. For example, consider this group at Flickr: a small group of people stitching together a quilt, each one creating a square. They are going to auction it on eBay to raise funds for tsunami victims. For a while they have taken ownership of the word quilt.

What’s not to like? The kind of ontology emerging in examples like that is smaller than your classic ontologies. These are not likely to predict global warming, but they are certainly heartwarming.

This is the long tail story from another angle: huge numbers, excruciating, mind-boggling diversity, billions and billions of tiny effects that sum up to something huge.

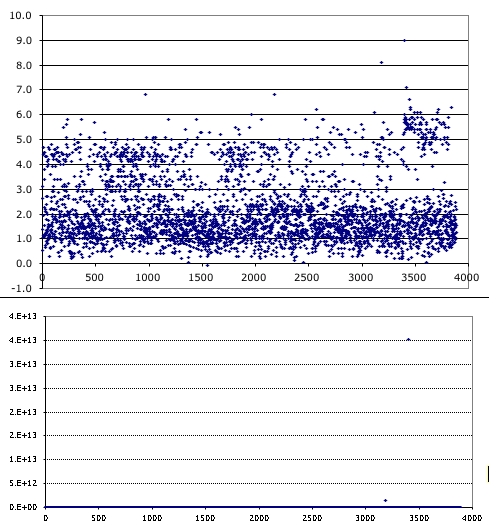

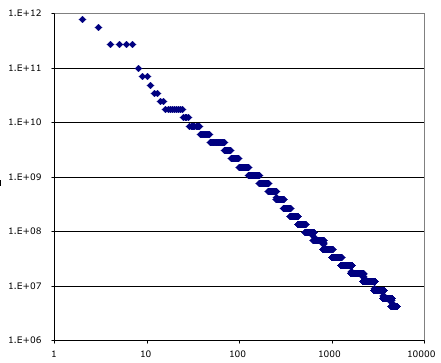

The chart on the right shows one point for each of the top five thousand earthquakes in the United States during the year 2000. The largest, a magnitude 8, would appear in the upper left, plotted as rank one on the horizontal axis (well, it would if I hadn’t dropped the top point, so the second largest is in the upper left). The smaller the earthquake, the larger its rank on the horizontal axis. The vertical axis is the energy released by each earthquake, estimated from its reported magnitude. The grainy look of the plot is because magnitudes were reported using only two digits. The data is from
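The post doesn’t say how energy was estimated from magnitude. A common rule of thumb is the Gutenberg–Richter energy relation, log10 E = 1.5 M + 4.8 with E in joules, and the sketch below assumes it. The magnitudes are made-up values, not the data behind the chart, and the function names are hypothetical. It shows the two things the chart relies on: rank ordering with the largest quake at rank one, and why two-digit magnitudes make the plot grainy (equal magnitudes give identical energies, so points stack into flat runs).

```python
import math
from collections import Counter

def energy_joules(magnitude: float) -> float:
    """Energy released, assuming the Gutenberg-Richter relation log10(E) = 1.5*M + 4.8 (joules)."""
    return 10 ** (1.5 * magnitude + 4.8)

def rank_order(magnitudes):
    """Return (rank, magnitude, energy) tuples, largest quake first at rank 1."""
    ordered = sorted(magnitudes, reverse=True)
    return [(rank, m, energy_joules(m)) for rank, m in enumerate(ordered, start=1)]

# Hypothetical magnitudes, reported to two digits like the chart's data.
sample = [8.0, 6.5, 5.1, 4.3, 4.3, 3.9, 3.9, 3.9, 3.2]

for rank, m, e in rank_order(sample):
    # A rank-order plot on log-log axes shows log10(rank) against log10(energy).
    print(f"rank {rank:2d}  M{m:.1f}  E={e:.3g} J  log10(rank)={math.log10(rank):.2f}")

# Under this relation, each whole step in magnitude is a factor of 10**1.5 in energy.
print(energy_joules(8.0) / energy_joules(7.0))

# Repeated two-digit magnitudes become identical energies: the "grainy" plateaus.
print(Counter(sample).most_common(2))
```

The factor of roughly 31.6 per magnitude step is why a single large quake can dominate the total energy even when it is vastly outnumbered by small ones.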

Here’s a fine critique of a common kind of delusion that arises when people think about the nature of the long tail. This is the intro to a New Yorker music review.