Stefano brought N3, or Notation 3, to my attention – it’s a good thing!

I hate XML. It’s a mess. Wrong on so many levels. But getting the industry to switch is now nearly impossible. XML has played almost all the cards you can play to make a standard sticky and lock in users. For example, its extreme complexity, just like that of the Windows API, makes people extremely reluctant to abandon their sunk costs after learning it.

That said, I keep hoping that something better will emerge.

One possibility is that something will emerge that provides an overwhelmingly improved UI. Making users smile can be a powerful force for change. So a cool UI might be the lever. Things like SVG and Laszlo are examples of folks making a run for this exit. On a lesser scale, the evolution of HTML/JavaScript just keeps on hill climbing.

A less plausible possibility is that we will all rendezvous around one standard form for storing our documents, our data. This idea has been a fantasy of the ivory tower crowd since the beginning of time. There has been a cool up-and-coming normal form at every stage of my life. I still have some stacks of punch cards. I wrote programs to convert IDL databases into suites of Prolog assertions. I can’t count the number of times I’ve forced some data structure to lie down in a relational database or a flat file that sort/awk/perl would swallow.

After a while one becomes inured to hearing the same tune, the old enthusiasm returned again in new clothes. You wish them the very best of luck because, of course, you remember how hopeful you once were that this could all come together. As a result I’ve been treating the Semantic Web work with a kind of bemused old fart’s detachment.

But! Step past all the self-indulgent ivory tower talk of one world data model. Skip the seminar on how soon we will compute the answer to everything. You don’t need to know from reification, or Mereological Ontological.

This stuff is actually useful.

Look at this:

    :dirkx foaf:based_near [
        a geo:Point;
        geo:lat "52.1563666666667";
        geo:long "4.48888333333333" ].

That tells us where something named here as :dirkx lives. That’s it! No wasted tokens. Easy to parse. That’s why I like N3. Easy for humans, easy for computers. XML is neither.
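To see how little is actually being said, here is a rough Python sketch of the statements that snippet boils down to. The blank-node label `_:b0` and the helpers `objects` and `location_of` are my own hypothetical names, not anything N3 defines. The one piece of real N3 involved is that the keyword `a` is shorthand for `rdf:type`.

```python
# The N3 snippet above, written out as plain (subject, predicate, object) triples.
# "_:b0" is a made-up label for the anonymous [ ... ] node.
TRIPLES = [
    (":dirkx", "foaf:based_near", "_:b0"),
    ("_:b0", "rdf:type", "geo:Point"),  # "a" in N3 means rdf:type
    ("_:b0", "geo:lat", "52.1563666666667"),
    ("_:b0", "geo:long", "4.48888333333333"),
]


def objects(triples, subject, predicate):
    """Return every object stated for the given subject and predicate."""
    return [o for s, p, o in triples if s == subject and p == predicate]


def location_of(triples, who):
    """Hypothetical helper: follow foaf:based_near to a (lat, long) pair, or None."""
    for point in objects(triples, who, "foaf:based_near"):
        lats = objects(triples, point, "geo:lat")
        longs = objects(triples, point, "geo:long")
        if lats and longs:
            return lats[0], longs[0]
    return None


print(location_of(TRIPLES, ":dirkx"))
```

Four statements, one lookup. A real application would use a proper N3 parser rather than hand-written tuples; the point is only that the data model underneath is this small.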

    <foaf:based_near>
      <geo:Point rdf:nodeID="leiden">
        <geo:lat>52.1563666666667</geo:lat>
        <geo:long>4.48888333333333</geo:long>
      </geo:Point>
    </foaf:based_near>

XML is good if you’re getting paid by the byte.
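If you want to check the byte count rather than take my word for it, something like this Python sketch will do. It compares only the two fragments as shown above, with blank lines and indentation squeezed out. Neither fragment includes its prefix or namespace declarations, and the XML one omits the enclosing element that would name :dirkx, so treat the result as a rough comparison and not a benchmark. The name `byte_size` is mine.

```python
# The two fragments from the post, one statement or element per line.
N3 = """\
:dirkx foaf:based_near [
a geo:Point;
geo:lat "52.1563666666667";
geo:long "4.48888333333333" ]."""

XML = """\
<foaf:based_near>
<geo:Point rdf:nodeID="leiden">
<geo:lat>52.1563666666667</geo:lat>
<geo:long>4.48888333333333</geo:long>
</geo:Point>
</foaf:based_near>"""


def byte_size(text):
    """Size of the text in bytes when encoded as UTF-8."""
    return len(text.encode("utf-8"))


print("N3 :", byte_size(N3), "bytes")
print("XML:", byte_size(XML), "bytes")
```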

I’d love to see XML displaced by something more practical. Something that lowers the barriers to entry so that you don’t need a few meg of code just to fool around. I believe that XML’s complexity is good for large players and bad for small players. I want to see the small players having fun – and frustrating the large players.

N3 is neat, but what about RDF?

RDF is just fine. You need to clear away all the overly serious ivory tower presentation. Get past all that righteous marshmallow fluff, and down at the core you find a nutritious center of practical, simple, useful good stuff. How nice! It’s going to drive the marshmallow men in their tower crazy if this thing takes off!

When I look at RDF I notice two critical things. The first is that it is much easier to stack up new standards in RDF than it is in XML. Look at the example above. It’s using a standard called geo and one called foaf. Click on those links to see how straightforward they are.
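Part of why stacking is cheap: a prefix like foaf: or geo: is just shorthand for a namespace URI, so one document can mix vocabularies freely. Here is a small Python sketch of that expansion. The two namespace URIs are the published FOAF and W3C Basic Geo (WGS84) ones. The `expand` helper and the `http://example.org/people#` namespace for the bare `:` prefix are assumptions of mine, since the example above does not show its prefix declarations.

```python
# Prefix table for the example. Only the foaf and geo entries are real, published
# namespaces; the entry for the bare ":" prefix is a placeholder assumption.
PREFIXES = {
    "foaf": "http://xmlns.com/foaf/0.1/",
    "geo": "http://www.w3.org/2003/01/geo/wgs84_pos#",
    "": "http://example.org/people#",
}


def expand(name, prefixes=PREFIXES):
    """Expand a prefixed name such as 'geo:lat' into a full URI."""
    prefix, sep, local = name.partition(":")
    if not sep or prefix not in prefixes:
        raise KeyError(f"unknown prefix in {name!r}")
    return prefixes[prefix] + local


for name in (":dirkx", "foaf:based_near", "geo:Point", "geo:lat", "geo:long"):
    print(name, "->", expand(name))
```

Adding a third vocabulary means adding one more line to the prefix table. Nothing already written has to change.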

In XML, defining a new standard takes extremely talented labor. Only a handful of people can both understand XML Schema and think in practical terms about real world problems. In RDF any fool can do it, which means that anybody with some data to move can get on board without having to go through some gauntlet of heavy industry standardization.

The second thing I notice about RDF is actually a blind spot.

Above I mentioned two ways that XML might get displaced. One on the supply side (data normal form) and one on the demand side (presentation). There is a third – data exchange. Exchange is where the powerful network effects are – always! RDF is better for data exchange than XML. It’s easier, it’s simpler, and it is far better for layering, mixing, and creating new standards.

This trio, and the standards around them, are key to making anything happen in the net.

- Supply: writers, data, storage, normal forms, etc.

- Demand: readers, presentations, UI, etc.

- Exchange: messaging, subscriptions, polling, push, etc.

The RDF folks think that the demand side is where the leverage is. That’s wrong. Content is not king! Exchange is king. In the world of ends, the middle rules.
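The claim that RDF is good for layering and mixing comes down to this: since everything is a triple, combining data from two sources is little more than a set union. A minimal Python sketch of that idea follows. The second feed and its nickname value are invented for illustration, `_:b0` is a made-up blank-node label, and `merge` and `predicates_about` are hypothetical helper names. A real merge also has to keep blank nodes from different sources apart, which this sketch sidesteps by having only one.

```python
# Triples from the location example earlier in the post.
LOCATION_FEED = {
    (":dirkx", "foaf:based_near", "_:b0"),
    ("_:b0", "geo:lat", "52.1563666666667"),
    ("_:b0", "geo:long", "4.48888333333333"),
}

# A hypothetical second source using a different FOAF property.
PROFILE_FEED = {
    (":dirkx", "foaf:nick", "dirkx"),
}


def merge(*graphs):
    """Combine any number of triple sets into one; duplicates collapse."""
    merged = set()
    for graph in graphs:
        merged |= graph
    return merged


def predicates_about(graph, subject):
    """Sorted list of predicates used with the given subject."""
    return sorted(p for s, p, o in graph if s == subject)


combined = merge(LOCATION_FEED, PROFILE_FEED)
print(predicates_about(combined, ":dirkx"))
```

Neither source had to know about the other, and neither had to agree on a schema up front. That is what makes it work in the middle.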

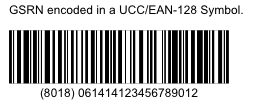

This power adaptor claims to be very conformant.

If I had a EAN.UCC Company Prefix of my very own (only $750