Let me take a stab at solving the second of the two large problems I

see with the current TypeKey design.

The Problem

In its most brutal form, the second problem with TypeKey is that it is

a land grab. It puts Six Apart in the position, intentionally or not,

of making exactly the same mistake that Microsoft made with Passport.

The design, presumably for simplicity, assumes that there is one

authority that everybody using TypeKey will turn to for their

authentication services; i.e. www.typekey.com. That makes

typekey.com the central authority for some universe of

authentication. Today this is blog comments. Tomorrow it might be

wiki contributions. The next day – who knows?

While at first blush this appears to be very valuable turf to grab, if

you are too greedy you destroy the value of the turf you’re getting.

That’s the lesson that Microsoft hopefully has learned from Passport.

Sure, you can build a system with a single central authority. Yes,

people will sign up for it. The trouble is that you then force other serious players

into a subordinate position. Other powerful players don’t like that.

They have no interest in playing a subordinate role to you.

The second problem with having a single central authority is that it

encourages the emergence of a monopoly. While I may think very highly

of the folks at Six Apart, I don’t think so highly of them as to

believe they should be encouraged to grab a dominant role in the

authentication of contributors of open/free content.

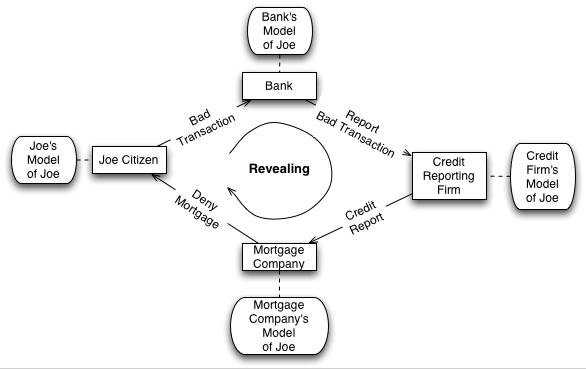

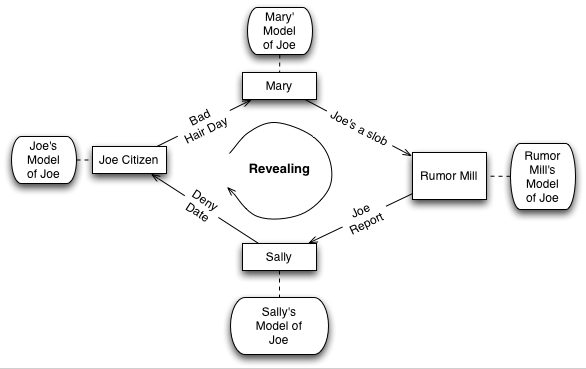

Solving the problem, technically, isn’t that hard. It is harder

than solving the design flaw of revealing a global unique identifier

for everybody, though.

A Solution

What is required?

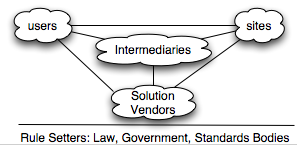

Users and sites need to be able to sign up with multiple

authentication services.

The more the merrier. In fact, it would be best to design on the presumption

that there will be a few hundred, or even thousands, of these “authorities”.

The TypeKey design bounces the user over to the single central authority.

In a design that avoids a single central authority the site needs to

infer one or more authorities to bounce the user over to.

The key added complexity is getting a list of these authorities before

we bounce the user over to them for authentication. This takes two steps.

The simpler TypeKey design requires only one. First we look up the

user’s preferred authorities. Second, we ask one or more of them to

authenticate/vouch for the user.
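
The two steps can be sketched roughly as follows. This is only an illustration: the function names, the example hostnames, and the in-memory tables standing in for real network calls are all assumptions of mine, not part of TypeKey.

```python
from typing import Callable, Dict, List, Optional

# Hypothetical data: which authorities each user prefers, in order.
PREFERENCES: Dict[str, List[str]] = {
    "alice": ["auth.example.org", "id.example.net"],
}

# Hypothetical stand-ins for remote authorities. Each takes a user name
# and returns True if it is willing to vouch for that user.
AUTHORITIES: Dict[str, Callable[[str], bool]] = {
    "auth.example.org": lambda user: False,          # does not know alice
    "id.example.net": lambda user: user == "alice",  # vouches for alice
}


def lookup_preferred_authorities(user: str) -> List[str]:
    """Step one: find the authorities this user prefers."""
    return PREFERENCES.get(user, [])


def authenticate(user: str, trusted: List[str]) -> Optional[str]:
    """Step two: ask each preferred authority the site trusts to vouch.

    Returns the name of the first authority that vouches, or None.
    """
    for name in lookup_preferred_authorities(user):
        if name not in trusted:
            continue  # the site has not signed up with this authority
        vouch = AUTHORITIES.get(name)
        if vouch is not None and vouch(user):
            return name
    return None
```

The `trusted` list reflects the requirement that sites, as well as users, sign up with authorities: a site only accepts a vouch from an authority it has chosen to deal with.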

A Possible Implementation

How do we look up the user’s preferred list of authorities?

There are lots of fanciful ideas for how to do this – most are not

practical. We might modify the installed base of web browsers so that

users could send their preferred set of authorities as part of their

browsing. We might have the user run through a proxy server provided by

his ISP and that proxy server could insert the list of preferred

authorities.

There are two reasonably practical approaches.

First we could introduce a central authority whose only role is to

return the user’s list of preferred authorities. The TypeKey folks

could volunteer to do that, in effect offering to redirect queries

about a given user to other authorities if that user has asked for

that.
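
As a rough sketch of this first approach, a directory service might look something like the following. The class name, its methods, and the choice to fall back to www.typekey.com when a user has expressed no preference are my assumptions for illustration, not anything TypeKey provides.

```python
from typing import Dict, List

# Assumed fallback when a user has not asked to be redirected elsewhere.
DEFAULT_AUTHORITY = "www.typekey.com"


class AuthorityDirectory:
    """Hypothetical central directory: it authenticates nobody, it only
    answers the question "which authorities does this user prefer?"."""

    def __init__(self) -> None:
        self._preferences: Dict[str, List[str]] = {}

    def set_preferences(self, user: str, authorities: List[str]) -> None:
        # Store a copy so later changes to the caller's list have no effect.
        self._preferences[user] = list(authorities)

    def lookup(self, user: str) -> List[str]:
        # Users who never registered a preference get the default authority.
        return list(self._preferences.get(user, [DEFAULT_AUTHORITY]))
```

The point of the design is that the directory holds only a list of names. The actual vouching still happens at whichever authority the user picked.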

Alternatively we could use tricks involving browser cookies. Each site

would then use these cookies to get the user’s authority list. This

solution is somewhat better, at least it’s faster, than the first

solution. It has a similar design challenge: somebody would

have to manage the domain used to hold the cookies shared over all

these sites.
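
To make the cookie idea a little more concrete, here is a sketch of how the authority list might be packed into and read back out of a cookie. The cookie name `pref_authorities` and the comma-separated, percent-encoded format are assumptions of mine. The sketch covers only the encoding and parsing, not the redirect through the shared domain that a site would need in order to see the cookie at all.

```python
from http.cookies import SimpleCookie
from typing import List
from urllib.parse import quote, unquote

COOKIE_NAME = "pref_authorities"  # hypothetical cookie name


def encode_authorities(authorities: List[str]) -> str:
    """Pack the list into a single cookie-safe value."""
    return quote(",".join(authorities), safe="")


def parse_authorities(cookie_header: str) -> List[str]:
    """Read the authority list from a raw Cookie header value.

    Returns an empty list if the cookie is absent or empty.
    """
    cookie = SimpleCookie()
    cookie.load(cookie_header)
    morsel = cookie.get(COOKIE_NAME)
    if morsel is None or not morsel.value:
        return []
    return [name for name in unquote(morsel.value).split(",") if name]
```

A site receiving an empty list would have to fall back on something else, for example asking the user directly or consulting a directory of the kind described in the first approach.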

Neither of these solutions is too hard to implement. Both solve the

problem of enabling a single dominant vendor for the role that TypeKey

is working to fill.

One final point. I don’t believe that introducing these mechanisms will

reduce the success of TypeKey as a major player in the blog

authentication industry. In fact I suspect that making changes along

these lines will accelerate adoption because it will reduce the

paranoia that is created by making the role of authentication server

scarce.