While doing a bit of work helping The Echo Nest get their developer network rolling, I got to observe an outrageously cool example of what can happen when you open up your technology.

This bends one of my blogging rules: no blogging about the job. In this case the job was for a consulting client, but the gig is all done and I’m not revealing anything proprietary.

I treasure examples of why relinquishing control of your technology is a good move, because bewilderment is often the first reaction when I suggest it. And most technology owners don’t seem to like the explanation, which seems straightforward to me.

My favorite answer for why this can work: searching for cool applications demands skills and attitudes that the firm lacks. Those skills live on the demand side, close to the problem the user needs to solve. I love this answer because it’s symmetric: scarcity on both sides. The firm should not hoard its options, because the knowledge needed to act on those options is scarce.

You can frame this answer as a search problem. Searching the option space created by the new technology requires all the usual stuff: capital, talent, knowledge, an appetite for risk, and intimacy with a high-value problem. Delegating the search to third parties works well because they bring knowledge the firm lacks: they understand the problem being solved, while the firm only understands the technology being applied. The developer in your developer network brings a heightened appetite to solve the problem, because it’s their problem. It is perversely fun to note that the third party will take risks the firm would never take; they might be small, foolish, impulsive, or very large and self-insured.

This isn’t the only workable model for a developer network. There are others; to mention just three: commoditizing, standardizing, and lead-generating models.

But if this is the model you’re using, you can begin to set expectations. A successful developer network must create surprises. If the search created by the developer network does not turn up some surprising applications of your technology, it’s probably not working yet.

When it works, the open invitation to use your technology creates a stream of surprises. Expect to be bewildered. Curiously, the somewhat bewildering decision to relinquish control, if successful, leads to yet more bewilderment. But surprise comes in many flavors. You may be envious because a third party discovers some extremely profitable application of your tech, as Microsoft was when the spreadsheet and the word processor emerged in their developer network. You may be offended, as some of us in Apache were when violent or pornographic web sites emerged in the user base. You may be disappointed, as I was when market research showed that most spreadsheets had no calculations in them. You are often delighted, as I suspect the iPhone folks were when somebody invented a wind instrument played by blowing on the phone’s microphone.

Dealing with the innovations created in the developer network can be quite distracting. That’s in their nature, since the best of them take place outside the core skills of the firm, which means that comprehending what they imply is hard. Because of that I seem to have developed a reflex that treasures these WTF moments.

So one aspect of managing a developer network is digesting the surprises. To oversimplify, there are two things the developers bring to your network: a willingness to take risks, and domain expertise. The first means that you often think: golly, that seems rash, foolhardy, and irresponsible.

Consider an example. It is very common to observe a developer building a truly horrible contraption. They use bad tools in stupid, even dangerous, ways. And just as you’re thinking “oh dear,” they get a big, contented grin on their face. If that happens inside an engineering team you’d likely take the guy aside to discuss the importance of craftsmanship. Or, if you’re a bit wiser, you might move him into sales engineering. But that kind of behavior is not bewildering; it’s a sign of somebody solving a problem, creating value. Value today, not tomorrow. It’s a sign of an intense need. Intense need alone is not enough to signal a high-value product opportunity; for that you also want the need to be widespread. Once you get over yourself and learn to appreciate the foolhardy, you can start to see that it is actually a good sign.

But developers don’t just bring a willingness to take risks. They can also bring scarce knowledge that you don’t have. I love these cases because it’s like meeting somebody at a party who’s an expert in some esoteric art you know nothing about. It’s a trip to a foreign country. It’s the best kind of customer contact: they aren’t just telling you about their problems, they are revealing intimate information about how to deal with those problems. Like travel to a foreign land, it is, again, bewildering.

We caught one of these last fall at The Echo Nest. I love it because it is so entirely off in left field.

The folks at The Echo Nest have pulled together a bundle of technology that knows a lot about the world of music, and they have given open access to a portion of that technology in the form of a set of web APIs. So they have a developer network. They have breadth of music knowledge because their tools read everything people on the web are saying about the world of music. They have in-depth understanding by virtue of software that listens to music and extracts rich descriptive features from individual pieces. It is all cool.

The surprise?

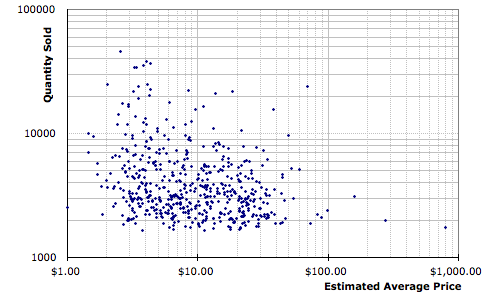

Last fall Philip Maymin (a Libertarian, a theoretical finance guy, a hedge fund manager, and one of the developers in The Echo Nest developer network) figured out how to use the APIs to guide stock market investments. How bewildering is that! He started with a time series, hit songs, and ran the music analysis software over that series. He then gleaned correlations between the features of those songs and market behavior. He reports that work in this paper: *Music and the Market: Song and Stock Volatility*.
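
For the curious, here is roughly what “gleaning correlations” can look like at its simplest. This is a minimal sketch under stated assumptions, not the method in Maymin’s paper: it assumes you have already reduced each period’s hit songs to one hypothetical numeric feature and have one market number per period (say, volatility), and it computes a plain Pearson correlation between the two series. The function name and all the numbers are made up for illustration.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length numeric series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two series of the same length (at least 2 points)")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of co-deviations from the means (unnormalized covariance).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    # Root sum of squared deviations for each series.
    sd_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    sd_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    if sd_x == 0 or sd_y == 0:
        raise ValueError("a constant series has no defined correlation")
    return cov / (sd_x * sd_y)


# Hypothetical toy data, one entry per period. These are NOT real measurements.
song_feature = [0.42, 0.55, 0.31, 0.60, 0.48]  # made-up per-period song feature score
market_vol = [0.18, 0.12, 0.25, 0.10, 0.15]    # made-up per-period market volatility

print(round(pearson(song_feature, market_vol), 3))
```

A result near +1 or -1 on five toy points means very little; when you search many song features against the market, some will correlate by chance, which is why a real study needs far more care than this sketch.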

It is a perfect example of how the developers in your network bring unique talents to the party. I doubt that anybody at The Echo Nest would have thought of it.

I often get asked where the money is in giving away your technology in some semi-open system. The question presumes that hoarding the options the technology creates is the safest way to milk the value out of them. If you start from that presumption, it’s a long march to see that other approaches might generate value. What I love about this example is that it is a delightful counterpoint to the greed implicit in that hoarding instinct. What’s a purer value generator than a market trading scheme?

Of course, now I’m curious: anybody got any examples of trading schemes based on the iPhone, Facebook, or Roomba platforms?