I see that Sun has set up an Open Source Office in a further attempt to bring some coherence to their strategy and tactics for relating to the open source phenomenon.

This kind of activity can be viewed from different frames. I, for example, haven’t the qualifications to view it through the Java frame. But let me comment on it from two frames I think I understand pretty well.

Sun has made some reasonably clever standards moves over the years. For a technology/platform vendor, the right way to play the standards game is to use it as a means to bring large, risk-averse buyers to the table. Once you’ve got them there, you work cooperatively with them to lower their risks and increase your ability to sell them solutions. Since one risk the buyers care about is vendor lock-in (and the antitrust laws are always in the background), the standards worked out by these groups tend to be reasonably open. Standards shape and create markets. Openness enables vendor competition.

This process is used to create new markets, and from the point of view of the technology vendor that requires solving two problems. First and foremost, it has to produce a design that meets the needs of the deep-pocketed, risk-averse buyers. Second, it has to create a market inside of which the competition is reasonably collegial. The new market emerges when you get the risk perceived by all parties below some threshold.

Open source created a new venue, another table, where standards could be negotiated. The people who show up at this table have tended to be different folks with different concerns. That’s good and bad.

The open source model works if what comes out of the process is highly attractive to developers (i.e. it creates opportunities for them) and the work creates a sufficiently exciting platform that a broad spectrum of users show up to work collegially in common cause to nurture it.

The goals of the two techniques are sufficiently different that both approaches can use the word open while meaning very different things. It has been very difficult for Sun to get that. For example, the large-buyer, risk-reducing, collegial market-creating standards approach talks about a thing called “the reference implementation” and is entirely comfortable if that’s written in Lisp. The small-innovator, option-creating, collegial common-cause-creating standards approach talks about the code base and is only interested in how useful it is as feedstock for the product they are deploying yesterday.

It’s nice to see that Sun has created an Open Source Office; it’s a further step in coming to terms with this shift in how standards are written and how the terms that define the market are negotiated. But my immediate reaction was: “Where’s the C?” as in CTO, or CIO, etc.

What does the future hold? Will firms come to have a chief-level officer who’s responsible for managing the complex liaison relationships that are implicit in both of those models of how standards are negotiated? I think so. This seems likely to become as key a class of strategic problems as business development, marketing, technology, information systems, etc.

Open source changes the relationship between software buyers and sellers. It has moved some of the power from firm owners and managers down and toward the software’s makers and users. But far more interestingly it has changed the complexity of the relationship. The relationship is less at arms length, less contractual, and more social, collaborative, and tedious.

This role hasn’t found a home in most organizations. On the buyer side it tends to be a minor subplot of the CTO’s job, though of course the CIO ought to be doing some of it as well. On the seller side it’s sometimes part of business development, or even marketing. That this role doesn’t even exist in most organizations is a significant barrier to tapping into the value that comes from creating higher-bandwidth relationships on the links in the supply chain.

This isn’t an argument about what the right answer is, because the answer is obviously some of both models. Some software will be sold in tight alignment with carefully crafted specifications, and CIOs will labor tirelessly to suppress any deviance from those specs. Some will be passed around in always-moving piles of code where developers and users both customize and refactor platforms in a continuous dialog about what is effective. The argument here is about how firms are going to evolve to manage the stuff in the second category. That’s not about managing risk; it’s about creating, tapping, and collaboratively nurturing opportunities.

This is a note about how to save a few hundred dollars on your Verizon cellphone bill, and why you should seriously consider switching from a BSD or old Apache style license to the new cooler Apache 2.0 license.


One obvious way to solve the problem is to have the producer ship the content directly to all N consumers. He pays for N units of outbound bandwidth and each of his consumers pays for 1 unit of inbound bandwidth. The total cost to get the message out is then 2*N. Of course I’m assuming inbound and outbound bandwidth costs are identical. If we assume that point-to-point message passing is all we’ve got, i.e. no broadcast, then 2*N is the minimal overall cost to get the content distributed.
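To make the arithmetic of the direct scheme concrete, here is a small Python sketch. It's a toy cost model under the same assumptions as above: one unit means one full copy of the content sent or received, inbound and outbound units cost the same, and there's no broadcast. The function name `direct_costs` and the `consumer0`, `consumer1` style labels are hypothetical, invented for illustration.

```python
from typing import Dict


def direct_costs(n: int) -> Dict[str, int]:
    """Bandwidth units paid by each party when the producer ships the
    content straight to each of n consumers (hypothetical toy model).

    One unit = one full copy sent or received; inbound and outbound
    units are assumed to cost the same.
    """
    costs = {"producer": n}  # n outbound copies, one per consumer
    for i in range(n):
        costs[f"consumer{i}"] = 1  # each consumer receives one copy
    return costs


costs = direct_costs(5)
print(costs["producer"], sum(costs.values()))  # prints: 5 10
```

The producer carries half of the 2*N total by himself.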

It’s not hard to imagine solutions where the consumers do more of the coordination, the cost is split more equitably, the producer’s costs plummet, and the whole system is substantially more fragile. For example, we can just line the consumers up in a row and have them bucket-brigade the content. We still have N links, and we still have a total cost of 2*N, but most of the consumers are now paying for 2 units of bandwidth: one to consume the content and one to pass it on. In this scheme the producer lucks out and has to pay for only one unit of bandwidth, as does the last consumer in the chain. This scheme is obviously very fragile: if any consumer drops out, everyone behind it in the chain is cut off. A design like this minimizes the chance of coordination expertise condensing, so coordination will likely remain of poor quality and high cost. Control over the relationships is very diffuse.
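The bucket brigade fits the same toy cost model. The names `brigade_costs` and `consumers_reached` are hypothetical. The second function is only a rough illustration of the fragility: if one consumer in the chain drops out, just the consumers ahead of it get the content.

```python
from typing import Dict


def brigade_costs(n: int) -> Dict[str, int]:
    """Bandwidth units per party when n consumers stand in a row and
    bucket-brigade the content (hypothetical toy model, n >= 1)."""
    costs = {"producer": 1}  # hands a single copy to the first consumer
    for i in range(n):
        is_last = i == n - 1
        # Receive one copy; forward one copy unless at the end of the chain.
        costs[f"consumer{i}"] = 1 if is_last else 2
    return costs


def consumers_reached(n: int, failed: int) -> int:
    """How many of the n consumers still get the content if the consumer
    at 0-based position `failed` drops out: only those ahead of it."""
    return min(failed, n)


costs = brigade_costs(5)
print(costs["producer"], costs["consumer2"], sum(costs.values()))  # prints: 1 2 10
print(consumers_reached(5, failed=1))  # prints: 1
```

The total is still 2*N. The scheme only moves most of the bill from the producer onto the consumers.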

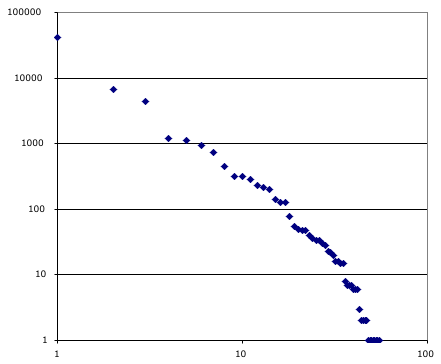

We can solve the distribution problem by adding a middleman. The producer hands his content to the middleman (adding one more link) and the middleman hands the content off to the consumers. This market architecture has N+1 links, so the total cost of this scheme is 2*(N+1). Since the middleman can serve multiple producers, the chance for coordination expertise to condense is generally higher in this scenario. Everybody except the middleman sees their costs drop to 1. Assuming the producer doesn’t mind being intermediated, he has an incentive to shift to this model: his bandwidth costs drop from N to 1, and he doesn’t have to become an expert on coordinating distribution. The middleman becomes a powerful force in the market. That’s a risk for the producers and the consumers.
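Here is a sketch of the middleman scheme under the same toy assumptions. `middleman_costs` is a hypothetical name. The middleman pays for one inbound copy from the producer plus N outbound copies, which is where the extra link in 2*(N+1) shows up.

```python
from typing import Dict


def middleman_costs(n: int) -> Dict[str, int]:
    """Bandwidth units per party when a middleman sits between one
    producer and n consumers (hypothetical toy model)."""
    costs = {
        "producer": 1,       # one copy handed to the middleman
        "middleman": 1 + n,  # one copy in, n copies out
    }
    for i in range(n):
        costs[f"consumer{i}"] = 1  # one copy in from the middleman
    return costs


costs = middleman_costs(5)
print(costs["middleman"], sum(costs.values()))  # prints: 6 12
```

Compared with the direct sketch, the system as a whole pays two extra units, but the producer's bill drops from N to 1.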

It is possible to solve problems like these without a middleman; instead we introduce exchange standards, replacing the middleman with a standard. (Aside: note that the second illustration, “Consumers coordinate,” is effectively showing a standards-based solution as well.) We might use a peer-to-peer distribution scheme, like BitTorrent for example. To use BitTorrent’s terminology, the substitute for the middleman is called “the swarm” and the coordination is done by an entity known as “the tracker.” I didn’t show the tracker in my illustration. When BitTorrent works perfectly, the producer hands one Nth of his content off to each of the N consumers. They then trade content amongst themselves. The cost is approximately 2 units of bandwidth for each of them. The tracker’s job is only to introduce them to each other. The coordination expertise is condensed into the standard. The system is robust if the average consumer contributes slightly over 2 units of bandwidth to the enterprise; it falls apart if that average falls below 2. A few consumers willing to contribute substantially more than 2N can be a huge help in avoiding market failure. The producer can fill that role.
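Here is an idealized swarm in the same toy model. This is not how BitTorrent actually schedules pieces. It's only the "works perfectly" case described above: the producer seeds piece i (1/N of the content) to consumer i, and each consumer then uploads its piece to the other N-1. The names `swarm_costs` and `swarm_is_viable` are hypothetical. The viability check is only a necessary capacity condition (total uploads must cover the N copies that have to arrive). It doesn't model trackers, churn, or protocol overhead.

```python
from fractions import Fraction
from typing import Dict, List


def swarm_costs(n: int) -> Dict[str, Fraction]:
    """Bandwidth units per party in an idealized swarm (hypothetical toy
    model): the producer seeds piece i, 1/n of the content, to consumer i,
    and every consumer then uploads its piece to the other n - 1."""
    piece = Fraction(1, n)
    costs: Dict[str, Fraction] = {"producer": n * piece}  # n pieces = 1 unit
    for i in range(n):
        inbound = piece + (n - 1) * piece  # seeded piece + pieces from peers = 1
        outbound = (n - 1) * piece         # own piece sent to every peer
        costs[f"consumer{i}"] = inbound + outbound
    return costs


def swarm_is_viable(consumer_uploads: List[Fraction], producer_upload: Fraction) -> bool:
    """Necessary capacity check: every consumer must end up receiving one
    full copy, so total upload (producer + consumers) must cover that."""
    needed = len(consumer_uploads)
    return producer_upload + sum(consumer_uploads) >= needed


costs = swarm_costs(5)
print(costs["consumer0"], sum(costs.values()))  # prints: 9/5 10

stingy = [Fraction(1, 2)] * 5  # each consumer uploads only half a copy
print(swarm_is_viable(stingy, Fraction(1)))  # prints: False
print(swarm_is_viable(stingy, Fraction(3)))  # prints: True
```

In this overhead-free model each consumer's total comes to 2 - 1/N units, and the system total is again 2*N, the same as the direct scheme. What changes is who pays. Real swarms add overhead that the sketch ignores. The last two lines show the point about a generous producer: raising `producer_upload` can carry consumers whose uploads fall short.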