I have a rule of thumb that data in motion is more interesting than data at rest. Both from a business architecture point of view and when designing, managing, or diagnosing a system. Thus my interest in middlemen, who intermediate transaction flows. Thus my interest in the ping problem, aka how to do forward chaining on the Internet. Content isn’t king, the hubs are king. The conversation is more important than the library.

Recently I’ve been kicking the tires on a bit of technology that goes by the name AMQP, or Advanced Message Queuing Protocol. It is, for all intents and purposes, an open standard for building your enterprise message bus. There are a couple of reasonably mature open source implementations at this point. Active communities. An active standards process which, and this is important, is driven by the users of the system and hasn’t yet been co-opted by the vendors.

Regularly throughout my life I’ve worked on real time control systems. So I have a big tangled set of design patterns for how those get built. Big sophisticated industrial control systems are full of three problems I find interesting. They are very heterogeneous, they are all about data in motion, and they feature power-law distributions in the event rates. Recently I’ve been finding it amusing to observe how much cloud computing is full of the same tangles. There is a hell of a lot of commonality across these problems: real time control, enterprise message bussing, managing all the moving parts in your cloud computing application.

To stay sane you can say there are three design patterns that stand atop your message bus. Broadcast, enqueuing work in progress, and the ever popular remote procedure call.

Work in progress Q’s are everywhere. You see them at the bank when you Q up for a teller, at the grocery store with the check out lines, or when you stick your mail into the mailbox on the corner. There is a nice term of art: “Fire and Forget”. When things go according to plan you slip your mail into the mailbox, the magic happens, and your valentine gets your card. Fire and forget is great because you can decouple the slow bits from the quick (user response time) bits. It also enables separation of concerns (you don’t have to run a postal system). It also makes scaling trivial (just hire a few more clerks, or spin up a few more computers). So one thing you can do with AMQP is set up virtual simulations of the queue at the bank. And AMQP implementations provide dials you can adjust to decide how reliable (vs. fast) you want that to be. For example you might set the dials to assure the messages are replicated across disk drives in multiple geographic locations. Or you might set the dials so the messages never leave the wire and RAM. There is a latency/reliability trade-off here.
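The queue at the bank can be sketched in a few lines. This is only an in-process stand-in built on Python’s standard library, not an AMQP client, and the names `run_bank` and `clerks` are made up for illustration. It shows the two properties praised above: the producer drops its work and moves on, and scaling is just a matter of changing the number of clerks.

```python
import queue
import threading

def run_bank(orders, clerks=3):
    """Enqueue every order, let `clerks` workers drain the line, return the results."""
    line = queue.Queue()
    done = []
    lock = threading.Lock()

    def clerk():
        while True:
            order = line.get()
            if order is None:          # sentinel: the bank is closing
                line.task_done()
                return
            with lock:
                done.append(order.upper())   # stand-in for the slow work
            line.task_done()

    workers = [threading.Thread(target=clerk) for _ in range(clerks)]
    for w in workers:
        w.start()
    for order in orders:
        line.put(order)                # fire and forget: put() returns at once
    for _ in workers:
        line.put(None)                 # one sentinel per clerk
    line.join()                        # wait until everything has been handled
    for w in workers:
        w.join()
    return sorted(done)
```

A real broker adds what this toy lacks: the line survives a crash (if you set the dials that way) and the clerks can live on other machines.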

The fire and forget pattern doesn’t work of course. We all love to worry. You buy something online. You fire off your order and then you forget about it. Ah, no you don’t. You put it on the back burner. You get a tracking number. From time to time you poll to see how it’s going. Sometimes the vendor sends you status reports. Sometimes he sends you bad news. While AMQP has lots of nice and necessary mechanism it doesn’t have tools for handling the range of semi-forget modalities: monitoring, tracking, status reporting, raising exceptions. (As an aside, it is interesting to tease apart the attempts to address these found in SMTP.)

In any case systems built around the Queue of Tasks design pattern are everywhere. This is the model seen in factories for everything: batch production, forms processing, continuous production lines, unix pipelines, etc. etc. I once heard a wonderful story about a big factory at the end of a pipeline. Pretty regularly the sun would come out and warm the pipeline. At that point a vast slug of vile material would rapidly explode out of the pipe and into a multi-million dollar holding tank. They wished the tank was larger.

When you build realtime control systems you often arrive after the fact. The factory already is chugging along and your goal is to try and make it run better, faster, etc. The first thing you do is try to get some visibility on what’s going on. At first you thrash around looking for any info that’s available. In software systems we look at the logs. We write code to monitor their tails. We tap into the logging system, which is actually just yet another message bus.

That logging and monitoring are similar but different is, I find, a source of frustration. It is common to find systems with lots of logs but very little monitoring. What monitoring is going on is retrospective. Online, live monitoring is sufficiently different from logging that it drives you toward a different architecture. It is one of the places that data in motion becomes distinct from data at rest.

One of the textbook examples of AMQP usage is the distribution of market data. A vast amount of data flows out of the world’s financial markets. Traders in those markets need to tap selectively into that flood so their trading systems can react. Which is exactly what you need when doing real time control. The architecture for this pushes the flood of data out to whoever is listening, in contrast to what is commonly seen in log analysis. There you see a roll up of logs into an aggregated, archival set where offline processes can then do analysis.

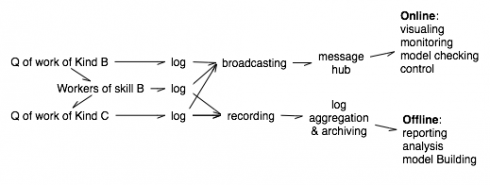

The distinction between data at rest vs. data in motion is identical to the distinction between recording and broadcasting. I find you need both. In real time control systems it tends to be common to find good infrastructure for the broadcast. In software systems I seem to encounter good infrastructure for the recording side. What the drawing demonstrates is how many more moving parts a system accretes as soon as you start to address these issues. In the drawing our simple ping-pong between workers and task queues has now sprouted a fur of mechanism so we can get a handle on what it’s doing. Each component of the system needs to participate in that. Each part has to cough up a useful log, which we then have to capture, record, and broadcast. Standardizing all that would be good, but it tends to be at minimum tedious and at worst intractable. First off, it is a lot to ask of any component that it enumerate all possible situations it might fall into. Exceptions, and hence logging, are all about the long tail. Secondly, a good log is likely to run at many times the frequency of the work; i.e. when the worker does one task he will generate multiple log messages.

The long-tail nature of log entries means that our online monitoring, etc. has to be very forgiving and heuristic. One common trick for solving the problem that logging runs at higher rates than the work is to situate this part of the system at a lower-latency, less-reliable point when you set the dials on your messaging hub. All that said, it’s often a problem that these things get built, and spec’d out, late in the game.

AMQP has some nice technology for implementing that messaging hub for the broadcasting side of things. One of the core abstractions in AMQP is the exchange, a place that accepts messages and dispatches them. Exchanges do not store messages; that is done by queues. In a typical broadcast setup market data floods into an exchange, and different consumers subscribe to it to get the parts they are interested in.

For example I’ve recently been playing around with a system for keeping a handle on a mess-o-components running on EC2. I flood the logs from every component to a single AMQP exchange which I call the workroom. For example, to get the machine’s syslog I add a line to syslog’s configuration so it routes a copy of every logging message to a unix pipe. On the other end of that pipe I run a python program that pumps the messages to the workroom. These messages are labeled with what AMQP calls a routing key, for example “log.syslog.crawler.i-234513”. At the same time I have daemons running on each machine that mumble messages into the workroom at regular intervals about swapping, process counts, etc. If I want to listen in on all the messages about a single machine then I subscribe to the workroom asking for messages whose routing_key matches “#.i-234513.#”, or if I want to listen in on all the syslog traffic I can tap into the “#.syslog.#” messages. That, for example, revealed that one of my machines was suffering a dictionary attack on its ssh port. This framework makes it easy to write simple scripts that raise the alarm if there is a sudden change in the swapping, or process counts.
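To make the pattern matching concrete, here is a small pure-Python sketch of how a topic exchange compares a binding pattern to a routing key: keys are dot-separated words, `*` stands for exactly one word, and `#` stands for zero or more words. The function name `topic_matches` is made up for illustration. A real broker does this matching for you, so this is only a model for reasoning about which messages a subscription will see.

```python
def topic_matches(pattern, routing_key):
    """Return True if an AMQP-style topic pattern matches the routing key."""
    p = pattern.split(".")
    k = routing_key.split(".")

    def match(i, j):
        if i == len(p):                 # pattern used up: key must be too
            return j == len(k)
        if p[i] == "#":                 # '#' may absorb zero or more words
            return any(match(i + 1, n) for n in range(j, len(k) + 1))
        if j == len(k):                 # key used up but pattern wants a word
            return False
        if p[i] == "*" or p[i] == k[j]: # '*' absorbs exactly one word
            return match(i + 1, j + 1)
        return False

    return match(0, 0)

key = "log.syslog.crawler.i-234513"
print(topic_matches("#.i-234513.#", key))  # everything about one machine
print(topic_matches("#.syslog.#", key))    # all the syslog traffic
```

Because `#` can match zero words, “#.i-234513.#” works whether the instance id sits at the start, middle, or end of the key.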

One thing I like to do is to attempt to assure that every component mumble a bit. That way I can listen to the workroom to see who’s gone missing; and as new components are brought on line I can notice their arrival. I like to use jstat, vmstat, even dtrace, to get the temperature of various system components. It’s nice to know when that java process descends into a garbage collection tar pit.
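A roll call of this kind needs very little code. The sketch below is an assumption about how one might do it, not the scripts described above: the class name `RollCall`, the `quiet_after` threshold, and the second component name in the usage are all hypothetical. Timestamps are passed in explicitly so the logic is easy to test; a listener on the workroom would call `heard()` with `time.time()` for each message it receives.

```python
class RollCall:
    """Track which components have mumbled recently."""

    def __init__(self, quiet_after=60.0):
        self.quiet_after = quiet_after   # seconds of silence before we worry
        self.last_heard = {}             # component name -> last timestamp

    def heard(self, component, now):
        """Record a mumble; return True if this component is a new arrival."""
        is_new = component not in self.last_heard
        self.last_heard[component] = now
        return is_new

    def missing(self, now):
        """Return the components that have been silent for too long."""
        return sorted(
            name for name, seen in self.last_heard.items()
            if now - seen > self.quiet_after
        )

roll = RollCall(quiet_after=60.0)
roll.heard("crawler.i-234513", now=0.0)
roll.heard("indexer.i-000001", now=100.0)   # hypothetical second component
print(roll.missing(now=100.0))              # the crawler has gone quiet
```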

The workroom message hub is a huge help getting some modularity into the system. It’s easier to write single purpose scripts that tap into the workroom to keep an eye on this or that aspect of the system.
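As one example of such a single purpose script, here is a sketch of a sudden-change alarm of the sort one might point at the swapping or process-count messages. The heuristic (compare each reading to a multiple of the recent average) and the names `SuddenChange`, `window`, and `factor` are assumptions chosen for illustration, in keeping with the forgiving and heuristic spirit the monitoring side calls for.

```python
from collections import deque

class SuddenChange:
    """Flag a reading that jumps well above the recent average."""

    def __init__(self, window=5, factor=3.0):
        self.recent = deque(maxlen=window)  # the last `window` readings
        self.factor = factor                # how big a jump counts as sudden

    def observe(self, value):
        """Return True if `value` exceeds factor x the mean of the window."""
        alarm = False
        if len(self.recent) == self.recent.maxlen:   # wait for a full window
            baseline = sum(self.recent) / len(self.recent)
            # floor the baseline at 1.0 so a near-zero history is not jumpy
            alarm = value > self.factor * max(baseline, 1.0)
        self.recent.append(value)
        return alarm

swap = SuddenChange(window=3, factor=3.0)
for reading in [10, 12, 11, 11, 50]:
    if swap.observe(reading):
        print("swapping jumped to", reading)
```

Keeping each check this small is what the hub buys you: the script knows nothing about where the numbers come from, only that they arrive.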

Good stuff. I have also been thinking about the use of AMQP topic exchanges for operations monitoring and alarming.

A single message can be demultiplexed into multiple queues and hence to multiple consumers that can look at it at a slightly different angle. For example, a message published with “server1.disk.nearfull” routing key can be sent to a consumer that will initiate cleaning up old log files on server1, and at the same time can be sent to consumers tasked with monitoring applications running on server1, in case a failover needs to be initiated. All magically happening out of the box, thanks to AMQP.

If you have any code on this and can open source it, I would be very interested in contributing, and in fact I suspect there will be many people who will be interested in something like this.

Nicely put.

I’m always a little surprised by people who build systems of any kind and then fail to stick in the real time, as it happens, tail the log file and stare at it until it makes sense kind of monitoring that’s my first instinct. There are sometimes anguished cries from “analytics” people on one of the web advertising lists whose data analysis vendor isn’t even keeping up with the pace of daily reporting. (daily wtf? if it matters, you have some sense for what it’s doing while the market is open for whatever market you are in)

I think of the ‘long tail’ of log file analysis like deep-sea fishing: I’m looking for that very rare, highly valuable fish among the flood of common breeds and noisy schools. You could wait for days without happening to see one going by, but of course, knowing its nature helps a lot in where (and when) to look.

I’m also interested in MQ for monitoring – I started writing a ‘dashboard’ application for our disparate monitoring sources (everything from Cacti to tiny perl scripts I wrote), and realised that polling was going to get painful quickly.

Now I’m looking at this mumbling approach instead, and can easily write small specialised clients to look for patterns, and for visualisation with known-latest data, with Cacti spitting out little data announcements as it collects them.
